Import/export: a common programming scenario

Most data-centric software must import and export its internal data model from and to some external data format. In many cases, this external format is a standard, or is otherwise dictated by external sources, and does not map one-to-one to the internal data model. This was also the case for CareConnect, HealthConnect/Corilus’ latest Electronic Medical Record software. CareConnect must be able to import/export its data from/to SUMEHR, PMF, and GPSMF documents. On top of this, CareConnect’s internal data model consists of some 300 classes, which means there are a lot of mappings to define.

To deal with the size and complexity of this scenario, we decided to break up import/export in a number of ways:

- Use a pivot model for import/export, so we only have to support a single import/export format

- Use a specialised language for translating between our domain model and the pivot model

- Use regular Java (CareConnect is written in Java) to handle file I/O and the database interaction

The pivot model in question was provided by our parent company, Corilus, and we will call it Corilus XML. Corilus provides a service for importing/exporting Corilus XML from/to SUMEHR, PMF, and GPSMF. We only have to worry about Corilus XML import/export now.

We previously used Dozer as a specialised transformation “language”, but Dozer is not meant for arbitrarily complex transformations. It is advertised as a bean mapper, for doing simple one-to-one mappings. Dozer also does not provide a complete programming language, only a simplified configuration language; complex mappings have to be programmed in Java, which scatters your transformation specification across the Dozer language and Java. Unfortunately, Dozer is the most expressive transformation tool that works directly on POJOs. The more expressive transformation tools, such as XSLT, ATL, QVT, Fujaba, Epsilon, and Viatra2, work on custom metadata representations, such as XML, EMF, graphs, or trees.

We chose EMF as a metadata representation, because it’s the closest thing to POJOs. In fact, it is possible to reverse-engineer the EMF Ecore metamodel directly from our existing POJOs, and generate the EMF reflective methods back on top of our existing code, as the EMF code generator is able to merge with hand-written code. We implemented a reverse-engineering step based on MoDisco and ATL. MoDisco can represent Java code as a model, and our custom EMiFy.atl transformation filters and translates our entity classes into an EMF Ecore metamodel. From there on it’s standard EMF: configure the EMF code generator in a .genmodel file to suppress interfaces and to put the code in a specific project and folder, then generate the Model code.

That takes care of our domain model in EMF. Corilus XML comes with an XSD schema, which EMF can directly convert into an Ecore metamodel. We just generated the EMF Model code for Corilus XML, and we were good to go!

ATL/EMFTVM for “online” transformation

As a transformation language, we chose ATL. Compared to other EMF-based transformation languages it is widely used, it uses the OCL standard for expressions, and it has been around since 2004. However, ATL, like most EMF-based transformation languages, is not designed for “online” transformation: it is meant for design-time transformations between models. Enter the EMF Transformation Virtual Machine: EMFTVM is a new runtime for ATL, which adds a number of features that make it more suitable for use within a Java application, such as a JIT compiler and the ability to reuse an existing VM instance for additional transformations.

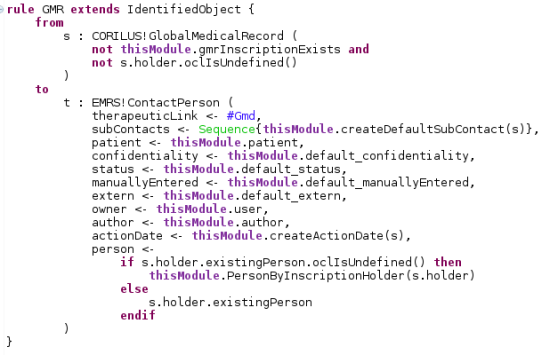

ATL is a mapping language, similar to Dozer. The difference is that ATL uses the notion of a “model” to define the scope of the transformation: only elements inside the input model are transformed. Dozer does not have a scoping mechanism, and just pulls in any referenced object for which a mapping definition exists. ATL, by contrast, applies its mapping rules top-down to each model element it can find, and uses a two-pass compiler approach to “weave” the elements generated by different rules back together. This frees the programmer from dealing with model traversal and rule execution order, which ATL takes care of, so the programmer can focus on defining the mapping between specific input and output elements. This has allowed us to distribute the transformation writing over three to four developers. One of these developers was trained in .NET instead of Java, which turned out not to be a barrier for writing ATL code. To give you an idea, here’s what the ATL rule for mapping the Global Medical Record looks like:
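The rule listing itself is not reproduced in this text. Separately from that rule, the scoping and two-pass “weaving” described above can be illustrated with a minimal Java sketch. This is not ATL or EMFTVM code, and all names in it (`SourceNode`, `TargetNode`, `TwoPassMapper`) are hypothetical. It assumes a single mapping rule and plain object references: the first pass creates one target per element of the input model and records a trace link, the second pass resolves references through those trace links.

```java
import java.util.ArrayList;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical source-side element that may refer to other source elements.
final class SourceNode {
    final String name;
    final List<SourceNode> references = new ArrayList<>();

    SourceNode(String name) {
        this.name = name;
    }
}

// Hypothetical target-side element produced by the mapping.
final class TargetNode {
    final String label;
    final List<TargetNode> links = new ArrayList<>();

    TargetNode(String label) {
        this.label = label;
    }
}

final class TwoPassMapper {
    /**
     * Maps every element of inputModel to a target element and returns the
     * source-to-target trace. Elements outside inputModel are never mapped.
     */
    static Map<SourceNode, TargetNode> transform(List<SourceNode> inputModel) {
        // Pass 1: create a target for each element in scope and record the trace link.
        Map<SourceNode, TargetNode> trace = new IdentityHashMap<>();
        for (SourceNode source : inputModel) {
            trace.put(source, new TargetNode("mapped:" + source.name));
        }
        // Pass 2: weave references together by looking up the trace.
        for (SourceNode source : inputModel) {
            TargetNode target = trace.get(source);
            for (SourceNode referenced : source.references) {
                TargetNode resolved = trace.get(referenced);
                if (resolved != null) { // out-of-scope references are skipped, not pulled in
                    target.links.add(resolved);
                }
            }
        }
        return trace;
    }
}
```

Because every target already exists before any reference is resolved, the order in which elements are visited does not matter, and cyclic references need no special handling. That is the property that lets each mapping be written on its own.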

Scaling import/export development

We have already mentioned that the workload of writing the ATL transformation code could be distributed over three to four people, thanks to the nature of ATL mapping rules. Because we also chose to separate our transformation code from our file I/O and database interaction code, another developer could work on the file I/O and database interaction in Java. Finally, the Corilus XML conversion service for SUMEHR, PMF, and GPSMF was taken care of by a separate development team at Corilus HQ, and was based on existing code. The critical path in the development pipeline was therefore formed by the ATL code, which is exactly the kind of workload that can easily be distributed over multiple developers.

Note that the MoDisco-EMiFy-EMF round trip for generating the Ecore model and EMF reflective methods is performed for each change to our domain model, and takes about 10 minutes each time. Most of this time is taken up by running MoDisco and the EMF code generator. This costs the developer who manages the domain model changes extra time, on top of the regular Java code changes and SQL migration code. The positive side of this picture is that the Ecore domain model can also be used reflectively to do dry-run transformations outside of the application codebase, which allows the ATL developers to test each change in isolation.

Technical modifications to EMFTVM

Technically, a few modifications to EMFTVM were required to make it work with EMF-decorated POJOs instead of standard EMF generated code. EMF uses ELists to represent collections, but standard POJOs, and therefore Hibernate, use standard Java Lists and Sets. EMFTVM has been adapted to work with these more general collection types instead of only ELists.

Also, an ExecEnvPool class was added so that multiple server-side import/export sessions can be supported. Each EMFTVM instance (an ExecEnv) is stateful, and cannot be used by another thread or session while it is already in use. The ExecEnvPool manages creation and pooling of available ExecEnv instances.
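To illustrate the pooling idea, here is a minimal, generic sketch in Java. It is not the actual ExecEnvPool implementation and makes no claim about its API: the class `SimplePool` and its `acquire`/`release` methods are hypothetical, and the pooled type is left as a type parameter. The sketch assumes a fixed upper bound on the number of instances and creates them lazily.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

// Hypothetical bounded pool: hands each instance to at most one caller at a time.
final class SimplePool<T> {
    private final BlockingQueue<T> idle;
    private final Supplier<T> factory;
    private final int maxSize;
    private final AtomicInteger created = new AtomicInteger();

    SimplePool(int maxSize, Supplier<T> factory) {
        this.idle = new ArrayBlockingQueue<>(maxSize);
        this.factory = factory;
        this.maxSize = maxSize;
    }

    /** Returns an idle instance, creates one if below the limit, or waits for a release. */
    T acquire() {
        T instance = idle.poll();
        if (instance != null) {
            return instance;
        }
        // Try to reserve a creation slot without exceeding maxSize.
        int count = created.get();
        while (count < maxSize) {
            if (created.compareAndSet(count, count + 1)) {
                try {
                    return factory.get();
                } catch (RuntimeException e) {
                    created.decrementAndGet(); // give the slot back if creation failed
                    throw e;
                }
            }
            count = created.get();
        }
        try {
            return idle.take(); // pool exhausted: block until another session releases
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting for a pooled instance", e);
        }
    }

    /** Hands an instance back so that another thread or session can reuse it. */
    void release(T instance) {
        if (!idle.offer(instance)) {
            throw new IllegalStateException("Released more instances than the pool created");
        }
    }

    int createdCount() {
        return created.get();
    }
}
```

A session would call `acquire()` before running a transformation and `release()` in a `finally` block afterwards. Since each pooled instance is stateful, a real pool would also have to clear per-session state before handing an instance to the next caller.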

Wrap-up

We tackled a complex and common programming scenario such as import/export by breaking it up in three ways:

- Use a pivot model for import/export, so we only have to support a single import/export format

- Use a specialised language for translating between our domain model and the pivot model

- Use regular Java to handle file I/O and the database interaction
